Occasionally I have ideas that I struggle to execute on. Usually, the failure comes down to either my own laziness or an excuse not to follow through because somebody else has already done it. Yesterday, neither of those problems prevailed, and I followed through on a tiny proof-of-concept project that’s not useful for much other than my own edification. Behold, my Pi Cluster!

This is something that has been done many times over at this point, but I figured I’d mess with it a bit to create a docker swarm cluster on bare metal and establish a sort of configuration management setup with Ansible.

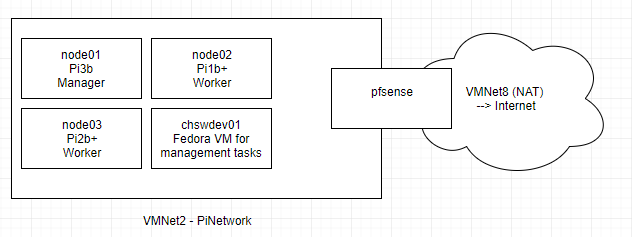

The network layout is pretty boring. There are 3 nodes, plus a Fedora VM that exists mostly to simplify accessing all of the Pis with Ansible or ssh. The PiNetwork is connected to the rest of the world via the VMware Workstation NAT network (VMNet 8).

To start, I hadn’t committed to Ansible yet, so I used Etcher to make 3 Raspbian SD cards. Then, I configured the hostname on each Pi and updated them on my regular LAN. Realizing how simple it could be to throw together a network with a few extra features using pfSense, I moved forward with that. I created a new SSH key pair just for this project and copied the public key to each host’s authorized_keys file.

As you’ve probably noticed, I didn’t document the process so some of this explanation is without imagery 🙁 Anyway, next I installed docker using the script from get.docker.com on each node. Something like the line below is nestled away in my bash history.

```shell
curl -sSL https://get.docker.com | sh
```

After docker was up and running (which admittedly took a bit), I ran the hello-world container on each host and all was well. Next up was creating and joining the swarm, which is super easy. The command on node01 was `docker swarm init`. That generates a token used to join the rest of the nodes to the cluster. After running the join command on the other hosts, we’re off to the races.

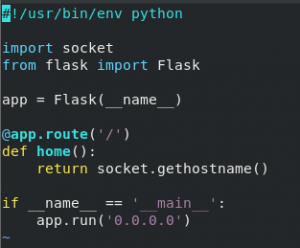

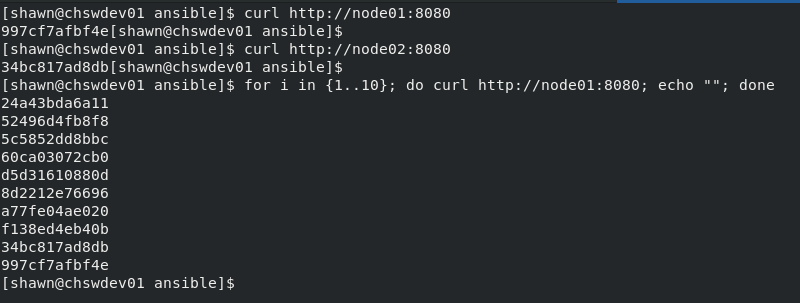
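For anyone following along without the screenshots, here is a rough sketch of the init and join steps. The manager IP is a placeholder I made up, and the script only prints the commands by default (set `DOCKER=docker` to actually run them on the node you are logged into).

```shell
#!/bin/sh
# Dry run by default: DOCKER expands to "echo docker", so each command is
# printed instead of executed. Set DOCKER=docker to run for real.
DOCKER="${DOCKER:-echo docker}"

# Assumption: node01's address on the Pi network (placeholder value).
MANAGER_IP="${MANAGER_IP:-10.0.0.11}"

# Run on node01: makes it the first manager and prints a join command.
swarm_init() {
    $DOCKER swarm init --advertise-addr "$MANAGER_IP"
}

# Run on node02 and node03: $1 is the worker token printed by swarm_init.
# 2377 is the default swarm management port.
swarm_join() {
    $DOCKER swarm join --token "$1" "$MANAGER_IP:2377"
}

# Run on node01 afterwards: lists every node and its status.
swarm_status() {
    $DOCKER node ls
}

swarm_init
swarm_join "<worker-token>"
swarm_status
```

In a real run, the token comes from the output of the init command, so there is nothing to invent by hand.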

To demonstrate that the cluster is working, I put together a quick Flask app to return the hostname of the host it’s running on, or in this case the container. Here’s that script in all its glory.

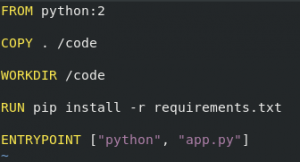

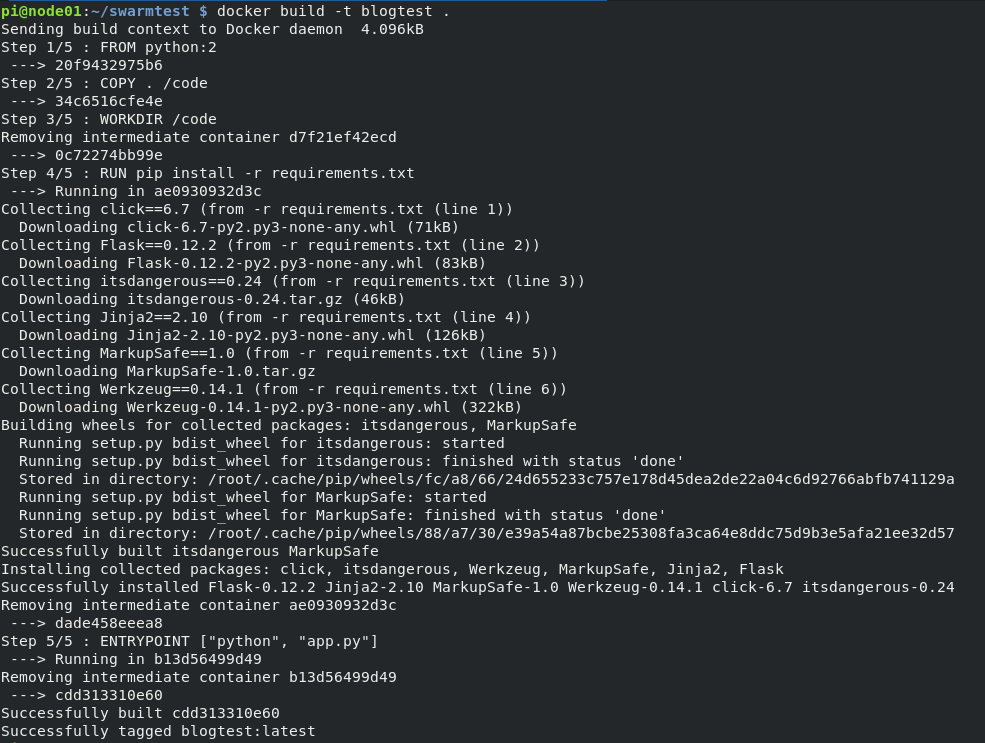
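Since the script above only exists as an image, here is a guess at what a hostname-returning Flask app of this kind could look like, written out by a small shell script. The file name `app.py`, the route, and port 5000 are assumptions, not taken from the screenshot.

```shell
#!/bin/sh
# Assumption: a scratch directory is fine for the sketch; set APP_DIR to
# write somewhere specific.
APP_DIR="${APP_DIR:-$(mktemp -d)}"
mkdir -p "$APP_DIR"

# The quoted 'EOF' keeps the shell from expanding anything in the Python code.
cat > "$APP_DIR/app.py" <<'EOF'
import socket

from flask import Flask

app = Flask(__name__)


@app.route("/")
def hostname():
    # Inside a container this is the container's hostname, not the Pi's.
    return socket.gethostname() + "\n"


if __name__ == "__main__":
    # Listen on all interfaces so Docker can publish the port.
    # Port 5000 is an assumption (it is Flask's default).
    app.run(host="0.0.0.0", port=5000)
EOF

# The only dependency the image build needs to install.
echo "flask" > "$APP_DIR/requirements.txt"

echo "wrote $APP_DIR/app.py and $APP_DIR/requirements.txt"
```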

Before we go further, note that you’ll need to find ARM-compatible container images to start from. Ones I’ve tried that work are the base Ubuntu image, hello-world, and python:2. I’m not looking for much right now, just proving that this concept works, so since python:2 works, we shall surge ahead with a simple Dockerfile to pull python:2, add our app and requirements.txt to it, install the requirements, and set the container’s entrypoint to our app.
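The Dockerfile described above could be as short as the sketch below. It assumes the app file is called `app.py` and tags the image `hostname-app`; both names are made up for illustration. The build command is printed rather than run, since the build has to happen on one of the Pis.

```shell
#!/bin/sh
# Assumption: a scratch directory; point BUILD_DIR at the folder that holds
# app.py and requirements.txt for a real build.
BUILD_DIR="${BUILD_DIR:-$(mktemp -d)}"
mkdir -p "$BUILD_DIR"

cat > "$BUILD_DIR/Dockerfile" <<'EOF'
# Base image the post reports as working on the Pis
FROM python:2
WORKDIR /app
# Add the requirements file and the app (app.py is an assumed name)
COPY requirements.txt app.py /app/
# Install the requirements
RUN pip install -r requirements.txt
# Start the app when the container starts
ENTRYPOINT ["python", "app.py"]
EOF

# Printed, not executed: run this on a Pi so the image is built for ARM.
echo "docker build -t hostname-app $BUILD_DIR"
```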

A quick attempt to build that container works, but we must build the container on one of the Pis so that we have an ARM base image instead of an Intel one. I’d put screenshots here, but everything is cached so there isn’t much to see. After copying the files to node01, we’re able to build our container for ARM. (Still cached, so no screenshot.) Now, we need to share this image via a local registry so we can access it from within the swarm. In a proper setup, we would have a trusted TLS cert and our docker workers would be happy as could be. But proper is a stretch when you’re describing a project of mine, so I needed to set the insecure registries flag when starting the docker daemon. Time for some Ansible practice.
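The insecure registry setting can live in /etc/docker/daemon.json instead of on the daemon's command line. A minimal sketch of generating that file follows; the registry address is a placeholder, and the file is written to a scratch directory rather than straight into /etc/docker.

```shell
#!/bin/sh
# Assumption: host:port of the local registry (placeholder value).
REGISTRY="${REGISTRY:-10.0.0.5:5000}"
# Assumption: scratch output directory, so nothing under /etc is touched.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
mkdir -p "$OUT_DIR"

# Unquoted EOF so that $REGISTRY is expanded into the JSON.
cat > "$OUT_DIR/daemon.json" <<EOF
{
  "insecure-registries": ["$REGISTRY"]
}
EOF

cat "$OUT_DIR/daemon.json"
```

The daemon only reads this file at startup, which is why a docker restart follows the copy.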

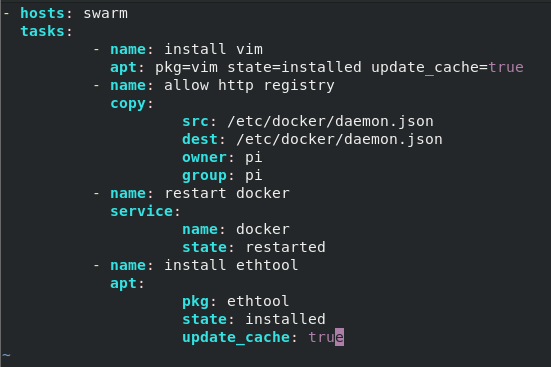

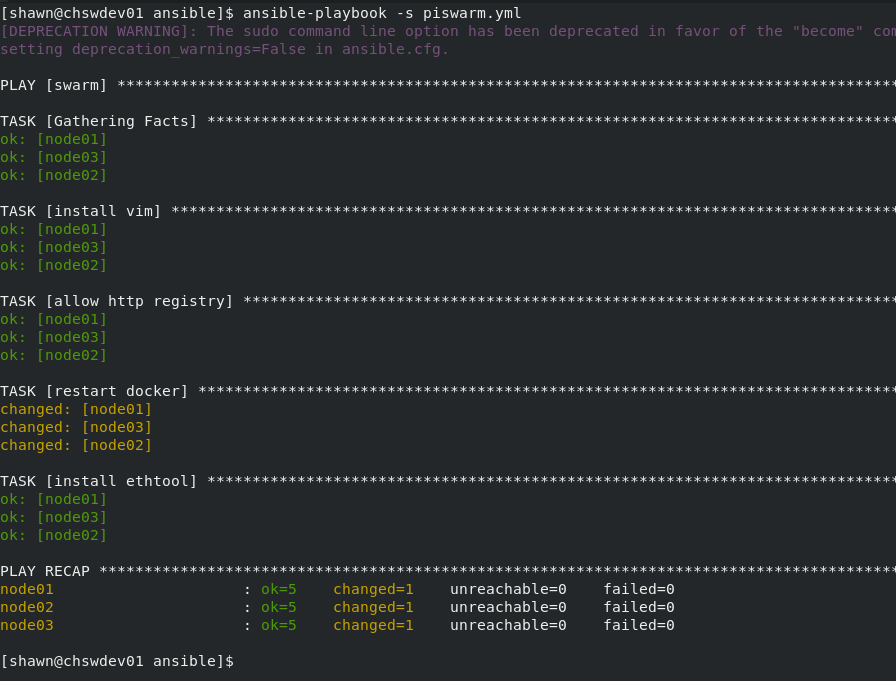

To start, go read anybody else’s guide on how to use Ansible. I just stumbled through some documentation and ended up with this mess. Hosts are defined in /etc/ansible/hosts by hostname (node01, node02, node03). This little disaster installs vim, copies the /etc/docker/daemon.json file from localhost (which is configured for an insecure registry), restarts docker, and finally installs ethtool. To be correct, docker should only be restarted if the daemon.json file is updated, but this was a first shot so I’m leaving it for now.

Despite it not being “right”, this will work for now and successfully connects to and validates or changes the hosts. (Note: I should probably update this to use the --become command-line argument instead of -s.)

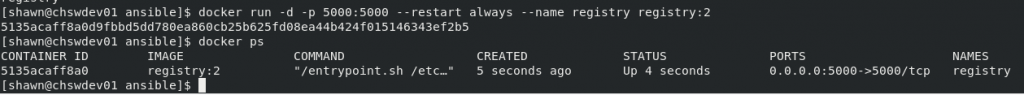
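For comparison, here is a sketch of how the same playbook might look with the two fixes mentioned above: docker restarts only when daemon.json actually changes (via a handler), and privilege escalation is declared with `become`. This is not the playbook from the screenshots; the file name `pi-cluster.yml` and the use of `hosts: all` (assuming the inventory holds only the three nodes) are my own choices.

```shell
#!/bin/sh
# Assumption: scratch directory for the generated playbook.
PLAY_DIR="${PLAY_DIR:-$(mktemp -d)}"
mkdir -p "$PLAY_DIR"

cat > "$PLAY_DIR/pi-cluster.yml" <<'EOF'
---
- hosts: all
  become: true
  tasks:
    - name: Install vim and ethtool
      apt:
        name:
          - vim
          - ethtool
        state: present

    - name: Copy daemon.json with the insecure registry setting
      copy:
        src: /etc/docker/daemon.json
        dest: /etc/docker/daemon.json
      notify: Restart docker

  handlers:
    # Runs only if a task that notifies it reported a change.
    - name: Restart docker
      service:
        name: docker
        state: restarted
EOF

# Printed, not executed: run it from the machine that has the inventory.
echo "ansible-playbook $PLAY_DIR/pi-cluster.yml"
```

With `become: true` set in the play, neither `-s` nor `--become` is needed on the command line.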

Now, we can push and pull from insecure registries (specifically our local one). The problem is, we don’t yet have a local registry. So I quickly put one up on my development box. That’s definitely not a best practice, but it works for now and doesn’t cost me any overhead on my pis.

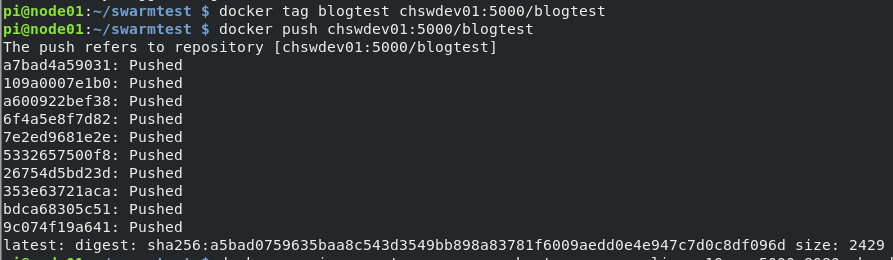

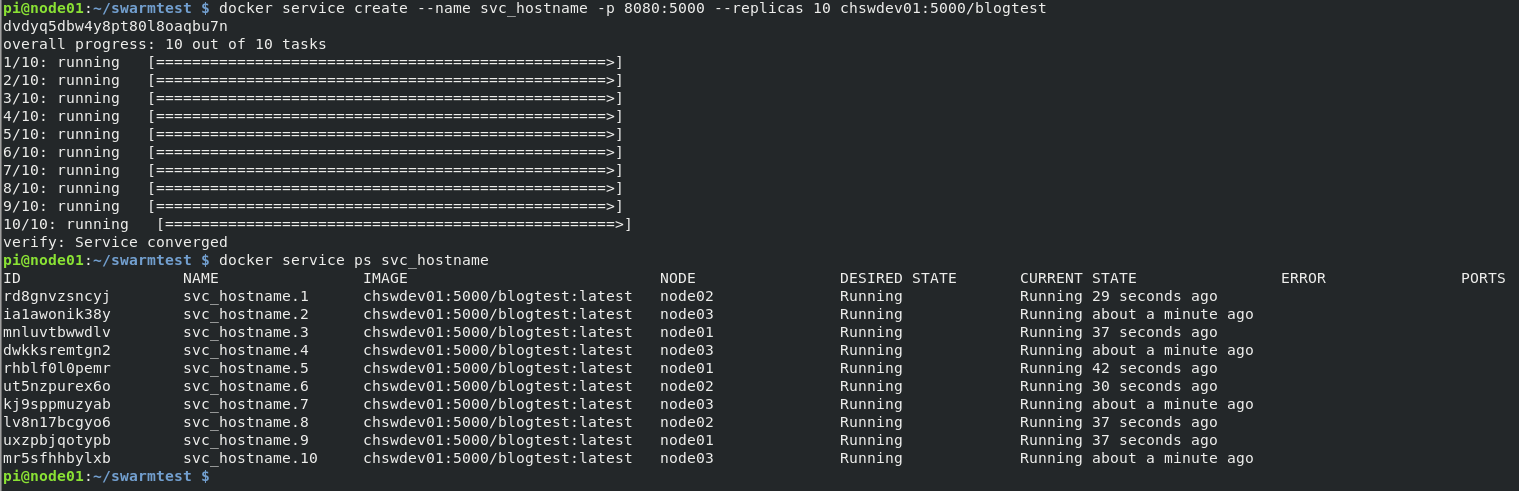
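Standing up a throwaway registry is typically a single command using the official `registry:2` image. The sketch below prints that command by default; the container name and the host port are assumptions.

```shell
#!/bin/sh
# Dry run by default; set DOCKER=docker to actually start the container.
DOCKER="${DOCKER:-echo docker}"

# Assumption: host port to expose. The registry listens on 5000 inside
# the container.
REGISTRY_PORT="${REGISTRY_PORT:-5000}"

start_registry() {
    $DOCKER run -d --restart=always --name registry \
        -p "$REGISTRY_PORT:5000" registry:2
}

start_registry
```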

That’s done. Now all of our swarm nodes (workers and managers) can access a registry on the LAN, so we’ll push our ARM container from node01 to the registry, create a service with that container (prompting the workers to download it), and test. I apologize for the additional images ahead; I’ll have a better solution for sharing text in the future.
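In text form, the push and service creation might look something like this. The registry address, image name, replica count, and published port are all placeholders of mine, and the commands are only printed unless `DOCKER=docker` is set.

```shell
#!/bin/sh
# Dry run by default; set DOCKER=docker to run on node01 (a manager).
DOCKER="${DOCKER:-echo docker}"

# Assumptions: registry address and image name are placeholders.
REGISTRY="${REGISTRY:-10.0.0.5:5000}"
IMAGE="${IMAGE:-hostname-app}"

# Tag the locally built image with the registry prefix, then push it.
push_image() {
    $DOCKER tag "$IMAGE" "$REGISTRY/$IMAGE"
    $DOCKER push "$REGISTRY/$IMAGE"
}

# Create the service; workers pull the image from the registry.
# 3 replicas and host port 8080 are assumptions; 5000 is the app's port.
create_service() {
    $DOCKER service create --name "$IMAGE" --replicas 3 \
        --publish 8080:5000 "$REGISTRY/$IMAGE"
}

push_image
create_service
```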

Because of how swarm’s default networking is configured, no matter which node we access, we get the next container in round-robin fashion. You get the idea with some requests below. Note that the container ID is different from the service replica ID.
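A quick way to watch the round robin is to hit one node several times in a row. This sketch assumes the service is published on port 8080 and that node01 resolves from wherever it runs; it prints the curl commands by default (set `CURL=curl` to send real requests).

```shell
#!/bin/sh
# Dry run by default; set CURL=curl to send real requests.
CURL="${CURL:-echo curl}"

# Assumptions: any swarm node works as the target; 8080 is a placeholder
# for whatever port the service publishes.
NODE="${NODE:-node01}"
PORT="${PORT:-8080}"

# Request the service $1 times. With several replicas running, each
# response should come back with a different container hostname.
poll_service() {
    i=0
    while [ "$i" -lt "$1" ]; do
        $CURL -s "http://$NODE:$PORT/"
        i=$((i + 1))
    done
}

poll_service 6
```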

In summary, I have a docker swarm cluster on Raspberry Pis managed partially by Ansible. Useful? TBD. A good way to pass the time on a Sunday? Yes.

Until next time,

Shawn